Intelligent design without a creator? Why evolution may be smarter than we thought

by Richard A Watson

27 February 2017

According to creationists, the eyes of the great horned owl cannot be explained by Darwinian evolution

Charles Darwin’s theory of evolution offers an explanation for why biological organisms seem so well designed to live on our planet. This process is typically described as ‘unintelligent’ – based on random variations with no direction. But despite its success, some oppose this theory because they don’t believe living things can evolve in increments. Something as complex as the eye of an animal, they argue, must be the product of an intelligent creator.

I don’t think invoking a supernatural creator can ever be a scientifically useful explanation. But what about intelligence that isn’t supernatural? Our new results, based on computer modelling, link evolutionary processes to the principles of learning and intelligent problem solving – without involving any higher powers. This suggests that, although evolution may have started off blind, with a couple of billion years of experience it has got smarter.

What is intelligence?

Intelligence can be many things, but sometimes it’s nothing more than looking at a problem from the right angle. Finding an intelligent solution can simply mean recognizing that something you assumed to be constant might be variable (like the orientation of the paper in the image below). It can also be about approaching a problem with the right building blocks.

With good building blocks (for example, triangles) it’s easy to find a sequence of steps (folds) that solves the problem by incremental improvement (each fold covers more of the picture). But with bad building blocks (folds that create long, thin rectangles) a complete solution is impossible.

Looking at a problem from the right angle makes it easy

In humans, the ability to approach a problem with an appropriate set of building blocks comes from experience – because we learn. But until now we have believed that evolution by natural selection can’t learn; it simply plods on, banging away relentlessly with the same random-variation ‘hammer’, incrementally accumulating changes when they happen to be beneficial.

The evolution of evolvability

In computer science we use algorithms, such as those modelling neural networks in the brain, to understand how learning works. Learning isn’t intrinsically mysterious; we can get machines to do it with step-by-step algorithms. Such machine learning algorithms are a well-understood part of artificial intelligence. In a neural network, learning involves adjusting the strength of the connections between neurons (making them stronger or weaker) in the direction that maximizes rewards. With simple methods like this it is possible to get neural networks not only to solve problems, but to get better at solving them over time.
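To make “adjusting connections in the direction that maximizes rewards” concrete, here is a minimal sketch of a single artificial neuron trained with the textbook delta rule. It is a generic illustration, not the model used in this research: the toy task (target = 2·x0 − x1), the learning rate and the number of passes are all hypothetical choices, and “reward” is taken to be the negative squared error.

```python
import random

def output(weights, inputs):
    """A single linear 'neuron': the weighted sum of its inputs."""
    return sum(w * x for w, x in zip(weights, inputs))

def reward(weights, examples):
    """Higher is better: negative mean squared error over the examples."""
    return -sum((output(weights, x) - t) ** 2 for x, t in examples) / len(examples)

def train(examples, n_inputs, passes=200, rate=0.05, seed=0):
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    for _ in range(passes):
        for inputs, target in examples:
            error = target - output(weights, inputs)
            # Nudge every connection a little in the direction that raises reward
            # (the delta rule: gradient ascent on -error**2, up to a constant factor).
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
    return weights

# Hypothetical toy task: learn target = 2*x0 - x1; the constant 1.0 input acts as a bias.
examples = [((x0, x1, 1.0), 2 * x0 - x1) for x0 in (0.0, 1.0, 2.0) for x1 in (0.0, 1.0)]
start = [0.0, 0.0, 0.0]
learned = train(examples, n_inputs=3)
print("reward before:", reward(start, examples))
print("reward after: ", reward(learned, examples))
```

No single update “knows” the answer; repeated small changes, each in the reward-increasing direction, are enough for the weights to settle near 2, −1 and 0.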

But what about evolution? Can it get better at evolving over time? The idea is known as the evolution of evolvability. Evolvability, simply the ability to evolve, depends on appropriate variation, selection and heredity – Darwin’s cornerstones. Interestingly, all of these components can be altered by past evolution, meaning past evolution can change the way that future evolution operates.

For example, random genetic variation can make a limb of an animal longer or shorter, but it can also change whether forelimbs and hindlimbs change independently or in a correlated manner. Such changes alter the building blocks available to future evolution. If past selection has shaped these building blocks well, it can make solving new problems look easy: easy enough to solve with incremental improvement. For example, if limb lengths have evolved to change independently, evolving greater height will require several separate changes (one for each limb), and the intermediate stages, with mismatched limbs, may be worse off. But if limb lengths change in a correlated way, a single change can lengthen all the limbs together and so be beneficial on its own.
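The limb example can be sketched as a toy hill-climbing simulation. Everything here is a hypothetical illustration: the fitness function (average limb length minus a penalty for any mismatch between forelimb and hindlimb), the mutation size and the penalty weight are assumptions chosen to mirror the argument, not measurements. A mutation is kept only if it raises fitness, so evolution proceeds purely by incremental improvement.

```python
import random

def fitness(fore, hind, mismatch_cost=2.0):
    """Hypothetical fitness: taller is better, but mismatched limbs are costly."""
    height = (fore + hind) / 2
    return height - mismatch_cost * abs(fore - hind)

def mutate_independent(fore, hind, rng, step=0.1):
    # Each mutation changes only one limb.
    delta = rng.choice((-step, step))
    if rng.random() < 0.5:
        return fore + delta, hind
    return fore, hind + delta

def mutate_correlated(fore, hind, rng, step=0.1):
    # Each mutation changes both limbs together.
    delta = rng.choice((-step, step))
    return fore + delta, hind + delta

def hill_climb(mutate, generations=100, seed=1):
    rng = random.Random(seed)
    fore, hind = 1.0, 1.0
    for _ in range(generations):
        new_fore, new_hind = mutate(fore, hind, rng)
        # Selection: keep a variant only if it is strictly fitter.
        if fitness(new_fore, new_hind) > fitness(fore, hind):
            fore, hind = new_fore, new_hind
    return fore, hind

# Stuck at (1.0, 1.0): every single-limb change lowers fitness.
print("independent:", hill_climb(mutate_independent))
print("correlated: ", hill_climb(mutate_correlated))
```

With independent variation, every single step toward greater height is selected against, so the population never moves; with correlated variation, the same selection pressure climbs steadily.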

The idea of the evolution of evolvability has been around for some time, but the detailed application of learning theory is beginning to give this area a much-needed theoretical foundation.

Gene networks evolve like neural networks learn

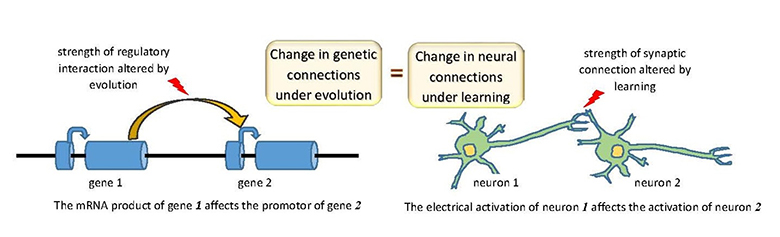

Our work shows that the evolution of regulatory connections between genes, which govern how genes are expressed in our cells, has the same learning capabilities as neural networks. In other words, gene networks evolve like neural networks learn. While connections in neural networks change in the direction that maximizes rewards, natural selection changes genetic connections in the direction that increases fitness. The ability to learn is not itself something that needs to be designed – it is an inevitable product of random variation and selection when acting on connections.
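As a rough illustration of “random variation and selection acting on connections”, the sketch below evolves the connection strengths of a tiny, hypothetical gene regulatory network. It is an assumption-laden toy, not the model from this work: the four genes, the tanh-based development rule, the starting expression levels, the target pattern and the mutation size are all invented for the example. Each generation mutates one connection and keeps the mutant if fitness does not fall, which moves the connections in a fitness-increasing direction, loosely analogous to how a neural network’s weights are adjusted to increase reward.

```python
import math
import random

N_GENES = 4  # hypothetical network size

def develop(B, initial, steps=5):
    """Expression levels after a few rounds of regulation.

    B[i][j] is the (hypothetical) regulatory effect of gene j on gene i.
    """
    p = list(initial)
    for _ in range(steps):
        p = [math.tanh(initial[i] + sum(B[i][j] * p[j] for j in range(N_GENES)))
             for i in range(N_GENES)]
    return p

def fitness(B, initial, target):
    """Higher when the adult expression pattern lines up with the target."""
    return sum(p * t for p, t in zip(develop(B, initial), target))

def evolve(initial, target, generations=2000, step=0.05, seed=2):
    rng = random.Random(seed)
    B = [[0.0] * N_GENES for _ in range(N_GENES)]  # start with no regulation
    best = fitness(B, initial, target)
    for _ in range(generations):
        # Random variation: nudge one regulatory connection up or down.
        i, j = rng.randrange(N_GENES), rng.randrange(N_GENES)
        mutant = [row[:] for row in B]
        mutant[i][j] += rng.choice((-step, step))
        # Selection: keep neutral or beneficial changes.
        f = fitness(mutant, initial, target)
        if f >= best:
            B, best = mutant, f
    return B, best

initial = [0.1, -0.1, 0.1, -0.1]   # hypothetical starting expression
target = [1.0, 1.0, -1.0, -1.0]    # hypothetical selected-for pattern
zero = [[0.0] * N_GENES for _ in range(N_GENES)]
B, best = evolve(initial, target)
print("fitness before:", fitness(zero, initial, target))
print("fitness after: ", best)
```

Nothing in the loop plans ahead; fitness rises only because variants with better-tuned connections are retained.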

The exciting implication of this is that evolution can evolve to get better at evolving in exactly the same way that a neural network can learn to be a better problem solver with experience. The intelligent bit is not explicit ‘thinking ahead’ (or anything else un-Darwinian); it is the evolution of connections that allow evolution to solve new problems without looking ahead.

So, when an evolutionary task we guessed would be difficult (such as producing the eye) turns out to be possible with incremental improvement, instead of concluding that dumb evolution was sufficient after all, we might recognize that evolution was very smart to have found building blocks that make the problem look so easy.

Interestingly, Alfred Russel Wallace (who suggested a theory of natural selection at the same time as Darwin) later used the term ‘intelligent evolution’ to argue for divine intervention in the trajectory of evolutionary processes. If the formal link between learning and evolution continues to expand, the same term could come to be used to imply the opposite.

This post was originally published in The Conversation, 28 January 2016